2/3/17

Create a multi-node Kubernetes cluster locally

Until recently, the easiest way to try out Kubernetes locally without worrying about the setup process was minikube, which runs a single-node cluster. Then Ubuntu's Juju added the ability to deploy multi-node Kubernetes setups in Ubuntu-based environments. Now there is a new project from Mirantis, kubeadm-dind-cluster, that lets users create multi-node Kubernetes clusters on any OS that can run Docker, either on a local machine or on a remote one. This is useful for understanding how Kubernetes clusters are created in production environments and for Kubernetes development.

It uses DIND (Docker-in-Docker) and kubeadm to provision Kubernetes. It is not a good option if your filesystem is btrfs.
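Before going further, it can help to confirm which filesystem Docker's data directory sits on. This is a minimal sketch, assuming Docker's default data root `/var/lib/docker` and GNU coreutils `stat`; the function names `fs_type` and `check_not_btrfs` are hypothetical helpers, not part of kubeadm-dind-cluster.

```bash
#!/bin/sh
# Hypothetical helpers: warn if Docker's data directory is on btrfs.
# Assumes GNU coreutils `stat`; /var/lib/docker is Docker's default data root.

# Print the filesystem type of a path (e.g. "ext2/ext3", "xfs", "btrfs").
fs_type() {
  stat -f -c %T "$1"
}

# Return 0 if the path is not on btrfs, 1 if it is, 2 if it cannot be checked.
check_not_btrfs() {
  local dir fstype
  dir="${1:-/var/lib/docker}"
  fstype="$(fs_type "$dir")" || return 2
  if [ "$fstype" = "btrfs" ]; then
    echo "warning: $dir is on btrfs; kubeadm-dind-cluster is not a good fit here" >&2
    return 1
  fi
  echo "$dir is on $fstype"
}
```

Call `check_not_btrfs` with no arguments to check the default location, or pass another path if your daemon uses a different data root.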

The setup steps are very straightforward: download the provisioning script for the Kubernetes version you want and run it on your host.

Prerequisites: Docker Engine 1.12+ and kubectl.
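A quick way to confirm both prerequisites are installed is to look them up on the `PATH`. This is a minimal sketch with hypothetical function names (`have_cmd`, `check_prereqs`); it only checks that the commands exist, not their versions.

```bash
#!/bin/sh
# Hypothetical prerequisite check for the two tools this post needs.

# Succeed if the named command is available on PATH.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

# Report each prerequisite and return non-zero if any is missing.
check_prereqs() {
  local missing cmd
  missing=0
  for cmd in docker kubectl; do
    if have_cmd "$cmd"; then
      echo "found: $cmd"
    else
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```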

Check the Docker version:

```
$ docker version
Client:
 Version: 17.05.0-ce
 API version: 1.29
 Go version: go1.7.5
 Git commit: 89658be
 Built: Thu May 4 22:10:54 2017
 OS/Arch: linux/amd64

Server:
 Version: 17.05.0-ce
 API version: 1.29 (minimum version 1.12)
 Go version: go1.7.5
 Git commit: 89658be
 Built: Thu May 4 22:10:54 2017
 OS/Arch: linux/amd64
 Experimental: false
```

The server's engine version (17.05.0-ce here) must be 1.12 or later. The "minimum version 1.12" shown next to the API version is the oldest client API version the daemon accepts, not the engine requirement.
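To script this check, the engine version can be read with `docker version --format`. The sketch below is a hypothetical helper (`version_ge` and `check_docker_engine` are illustrative names) and assumes GNU `sort -V` is available for version ordering.

```bash
#!/bin/sh
# Hypothetical check that the Docker engine meets the 1.12+ prerequisite.

# version_ge A B succeeds when version A >= version B (uses GNU `sort -V`).
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

check_docker_engine() {
  local ver
  # Server.Version is the engine version, e.g. 17.05.0-ce in the output above.
  ver="$(docker version --format '{{.Server.Version}}')" || return 1
  if version_ge "$ver" 1.12; then
    echo "Docker engine $ver is new enough"
  else
    echo "Docker engine $ver is older than 1.12" >&2
    return 1
  fi
}
```

`sort -V` compares dotted components numerically, so `1.9` sorts before `1.12` and the newer `17.05.0-ce` style versions sort after `1.12`.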

Download the provisioning script for Kubernetes v1.8:

```bash
wget https://cdn.rawgit.com/Mirantis/kubeadm-dind-cluster/master/fixed/dind-cluster-v1.8.sh
```

Then change the permissions:

```bash
chmod 700 dind-cluster-v1.8.sh
```

Execute the script to set up the cluster:

```bash
./dind-cluster-v1.8.sh up
```
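The download, permission, and `up` steps can be wrapped into one function. This is a sketch, not part of the project: `dind_script_name`, `dind_up`, and `DIND_BASE_URL` are hypothetical names, the `dind-cluster-v<version>.sh` naming for versions other than 1.8 is assumed from the v1.8 script, and because rawgit.com has since been retired the URL used in this post may no longer resolve.

```bash
#!/bin/sh
# Hypothetical wrapper around the download / chmod / up steps above.
# Override DIND_BASE_URL if the rawgit URL no longer serves the script.
DIND_BASE_URL="${DIND_BASE_URL:-https://cdn.rawgit.com/Mirantis/kubeadm-dind-cluster/master/fixed}"

# Map a Kubernetes minor version such as 1.8 to the script file name
# (naming pattern assumed from dind-cluster-v1.8.sh).
dind_script_name() {
  printf 'dind-cluster-v%s.sh\n' "$1"
}

# Download the script for the given version (default 1.8) into the current
# directory, make it executable, and bring the cluster up.
dind_up() {
  local script
  script="$(dind_script_name "${1:-1.8}")"
  wget -q "$DIND_BASE_URL/$script" -O "$script" || return 1
  chmod 700 "$script"
  "./$script" up
}
```

For example, `dind_up 1.8` repeats the three commands shown above.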

The script supports these usage options:

```
usage:
  ./dind-cluster-v1.8.sh up
  ./dind-cluster-v1.8.sh reup
  ./dind-cluster-v1.8.sh down
  ./dind-cluster-v1.8.sh init kubeadm-args...
  ./dind-cluster-v1.8.sh join kubeadm-args...
  ./dind-cluster-v1.8.sh clean
  ./dind-cluster-v1.8.sh e2e [test-name-substring]
  ./dind-cluster-v1.8.sh e2e-serial [test-name-substring]
  ./dind-cluster-v1.8.sh dump
  ./dind-cluster-v1.8.sh dump64
  ./dind-cluster-v1.8.sh split-dump
  ./dind-cluster-v1.8.sh split-dump64
```

Output from the cluster configuration:

```
* Making sure DIND image is up to date
v1.8: Pulling from mirantis/kubeadm-dind-cluster
952132ac251a: Pull complete
82659f8f1b76: Pull complete
c19118ca682d: Pull complete
8296858250fe: Pull complete
24e0251a0e2c: Pull complete
2545d638d973: Pull complete
e0b45d7ea196: Pull complete
8d7d40f3e602: Pull complete
216f5a138844: Pull complete
c71de27d6b60: Pull complete
352d5aa5dfd1: Pull complete
e6b3ec6d10dc: Pull complete
d576f0c39d8a: Pull complete
93892148e892: Pull complete
3a2a24fee2f8: Pull complete
36aba2bf4c04: Pull complete
677c259304fd: Pull complete
37b4174ef952: Pull complete
45edc9e8c9c3: Pull complete
44be12e55e52: Pull complete
9d50bbdbeba1: Pull complete
998d505d8f97: Pull complete
b100e6b528dd: Pull complete
63bbd3b9ddcd: Pull complete
Digest: sha256:d70c4acc968fd209969c612ef01ffd6737f7eee63d632162eaed992b97c439b9
Status: Downloaded newer image for mirantis/kubeadm-dind-cluster:v1.8
/home/ukumaa1/.kubeadm-dind-cluster/kubectl-v1.8.1: OK
* Starting DIND container: kube-master
* Running kubeadm: init --config /etc/kubeadm.conf --skip-preflight-checks
Initializing machine ID from random generator.
Synchronizing state of docker.service with SysV init with /lib/systemd/systemd-sysv-install...
Executing /lib/systemd/systemd-sysv-install enable docker
Loaded image: gcr.io/google_containers/kube-discovery-amd64:1.0
Loaded image: gcr.io/google_containers/pause-amd64:3.0
Loaded image: gcr.io/google_containers/etcd-amd64:2.2.5
Loaded image: mirantis/hypokube:base
Loaded image: gcr.io/google_containers/etcd-amd64:3.0.17
Loaded image: gcr.io/google_containers/kubedns-amd64:1.7
Loaded image: gcr.io/google_containers/exechealthz-amd64:1.1
Loaded image: gcr.io/google_containers/kube-dnsmasq-amd64:1.3
Loaded image: gcr.io/google_containers/etcd:2.2.1

real 0m13.584s
user 0m0.720s
sys 0m0.684s
Sending build context to Docker daemon 236 MB
Step 1 : FROM mirantis/hypokube:base
 ---> 2d71116d5afc
Step 2 : COPY hyperkube /hyperkube
 ---> d9b0ccd6b34d
Removing intermediate container 30997ec5d13e
Successfully built d9b0ccd6b34d
Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /lib/systemd/system/kubelet.service.
[kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.
[init] Using Kubernetes version: v1.8.4
[init] Using Authorization modes: [Node RBAC]
[preflight] Skipping pre-flight checks
[kubeadm] WARNING: starting in 1.8, tokens expire after 24 hours by default (if you require a non-expiring token use --token-ttl 0)
[certificates] Generated ca certificate and key.
[certificates] Generated apiserver certificate and key.
[certificates] apiserver serving cert is signed for DNS names [kube-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.192.0.2]
[certificates] Generated apiserver-kubelet-client certificate and key.
[certificates] Generated sa key and public key.
[certificates] Generated front-proxy-ca certificate and key.
[certificates] Generated front-proxy-client certificate and key.
[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"
[kubeconfig] Wrote KubeConfig file to disk: "admin.conf"
[kubeconfig] Wrote KubeConfig file to disk: "kubelet.conf"
[kubeconfig] Wrote KubeConfig file to disk: "controller-manager.conf"
[kubeconfig] Wrote KubeConfig file to disk: "scheduler.conf"
[controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"
[controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"
[controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"
[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"
[init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests"
[init] This often takes around a minute; or longer if the control plane images have to be pulled.
[apiclient] All control plane components are healthy after 24.502770 seconds
[uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[markmaster] Will mark node kube-master as master by adding a label and a taint
[markmaster] Master kube-master tainted and labelled with key/value: node-role.kubernetes.io/master=""
[bootstraptoken] Using token: 6507ac.a6b2cfac737576ff
[bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[addons] Applied essential addon: kube-dns
[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run (as a regular user):

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  http://kubernetes.io/docs/admin/addons/

You can now join any number of machines by running the following on each node
as root:

  kubeadm join --token 6507ac.a6b2cfac737576ff 10.192.0.2:6443 --discovery-token-ca-cert-hash sha256:7d39227ea2e2e58f1e3f11bf3bb99ae960f22c8c76b927450ea0c3d95041adce

real 0m31.304s
user 0m3.556s
sys 0m0.156s
Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply
daemonset "kube-proxy" configured
No resources found
* Setting cluster config
Cluster "dind" set.
Context "dind" created.
Switched to context "dind".
* Starting node container: 1
* Starting DIND container: kube-node-1
* Node container started: 1
* Starting node container: 2
* Starting DIND container: kube-node-2
* Node container started: 2
* Joining node: 1
* Running kubeadm: join --skip-preflight-checks --token 6507ac.a6b2cfac737576ff 10.192.0.2:6443 --discovery-token-ca-cert-hash sha256:7d39227ea2e2e58f1e3f11bf3bb99ae960f22c8c76b927450ea0c3d95041adce
* Joining node: 2
* Running kubeadm: join --skip-preflight-checks --token 6507ac.a6b2cfac737576ff 10.192.0.2:6443 --discovery-token-ca-cert-hash sha256:7d39227ea2e2e58f1e3f11bf3bb99ae960f22c8c76b927450ea0c3d95041adce
Initializing machine ID from random generator.
Initializing machine ID from random generator.
Warning: Stopping docker.service, but it can still be activated by:
  docker.socket
Synchronizing state of docker.service with SysV init with /lib/systemd/systemd-sysv-install...
Executing /lib/systemd/systemd-sysv-install enable docker
Synchronizing state of docker.service with SysV init with /lib/systemd/systemd-sysv-install...
Executing /lib/systemd/systemd-sysv-install enable docker

real 0m23.338s
user 0m0.884s
sys 0m0.628s
Loaded image: gcr.io/google_containers/kube-discovery-amd64:1.0
Loaded image: gcr.io/google_containers/pause-amd64:3.0
Loaded image: gcr.io/google_containers/etcd-amd64:2.2.5
Loaded image: mirantis/hypokube:base
Loaded image: gcr.io/google_containers/etcd-amd64:3.0.17
Loaded image: gcr.io/google_containers/kubedns-amd64:1.7
Loaded image: gcr.io/google_containers/exechealthz-amd64:1.1
Loaded image: gcr.io/google_containers/kube-dnsmasq-amd64:1.3
Loaded image: gcr.io/google_containers/etcd:2.2.1
Loaded image: gcr.io/google_containers/kube-discovery-amd64:1.0
Loaded image: gcr.io/google_containers/pause-amd64:3.0
Loaded image: gcr.io/google_containers/etcd-amd64:2.2.5
Loaded image: mirantis/hypokube:base
Loaded image: gcr.io/google_containers/etcd-amd64:3.0.17
Loaded image: gcr.io/google_containers/kubedns-amd64:1.7
Loaded image: gcr.io/google_containers/exechealthz-amd64:1.1
Loaded image: gcr.io/google_containers/kube-dnsmasq-amd64:1.3
Loaded image: gcr.io/google_containers/etcd:2.2.1

real 0m23.416s
user 0m0.836s
sys 0m0.696s
Sending build context to Docker daemon 236 MB
Step 1 : FROM mirantis/hypokube:base
 ---> 2d71116d5afc
Step 2 : COPY hyperkube /hyperkube
Sending build context to Docker daemon 236 MB
Step 1 : FROM mirantis/hypokube:base
 ---> 2d71116d5afc
Step 2 : COPY hyperkube /hyperkube
 ---> 60938d2b7914
Removing intermediate container 48ccfde421ca
Successfully built 60938d2b7914
 ---> 8f66f8a1db37
Removing intermediate container 2acf4d950472
Successfully built 8f66f8a1db37
Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /lib/systemd/system/kubelet.service.
Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /lib/systemd/system/kubelet.service.
[kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.
[preflight] Skipping pre-flight checks
[discovery] Trying to connect to API Server "10.192.0.2:6443"
[discovery] Created cluster-info discovery client, requesting info from "https://10.192.0.2:6443"
[discovery] Requesting info from "https://10.192.0.2:6443" again to validate TLS against the pinned public key
[kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.
[preflight] Skipping pre-flight checks
[discovery] Trying to connect to API Server "10.192.0.2:6443"
[discovery] Created cluster-info discovery client, requesting info from "https://10.192.0.2:6443"
[discovery] Requesting info from "https://10.192.0.2:6443" again to validate TLS against the pinned public key
[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "10.192.0.2:6443"
[discovery] Successfully established connection with API Server "10.192.0.2:6443"
[bootstrap] Detected server version: v1.8.1
[bootstrap] The server supports the Certificates API (certificates.k8s.io/v1beta1)
[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "10.192.0.2:6443"
[discovery] Successfully established connection with API Server "10.192.0.2:6443"
[bootstrap] Detected server version: v1.8.1
[bootstrap] The server supports the Certificates API (certificates.k8s.io/v1beta1)

Node join complete:
* Certificate signing request sent to master and response
  received.
* Kubelet informed of new secure connection details.

Run 'kubectl get nodes' on the master to see this machine join.

real 0m0.698s
user 0m0.348s
sys 0m0.060s

Node join complete:
* Certificate signing request sent to master and response
  received.
* Kubelet informed of new secure connection details.

Run 'kubectl get nodes' on the master to see this machine join.

real 0m0.713s
user 0m0.376s
sys 0m0.040s
* Node joined: 1
* Node joined: 2
* Deploying k8s dashboard
deployment "kubernetes-dashboard" created
service "kubernetes-dashboard" created
clusterrolebinding "add-on-cluster-admin" created
* Patching kube-dns deployment to make it start faster
Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply
deployment "kube-dns" configured
* Taking snapshot of the cluster
deployment "kube-dns" scaled
deployment "kubernetes-dashboard" scaled
pod "kube-proxy-ddjwk" deleted
pod "kube-proxy-s69fx" deleted
No resources found.
* Waiting for kube-proxy and the nodes
.................[done]
* Bringing up kube-dns and kubernetes-dashboard
deployment "kube-dns" scaled
deployment "kubernetes-dashboard" scaled
.................................[done]
NAME          STATUS    ROLES     AGE       VERSION
kube-master   Ready     master    2m        v1.8.1
kube-node-1   Ready     <none>    58s       v1.8.1
kube-node-2   Ready     <none>    1m        v1.8.1
* Access dashboard at: http://localhost:8080/ui
```

Check the nodes in the cluster:

```
$ kubectl get nodes
NAME          STATUS    AGE       VERSION
kube-master   Ready     6m        v1.8.1
kube-node-1   Ready     4m        v1.8.1
kube-node-2   Ready     5m        v1.8.1
```

Check out the repository on GitHub: https://github.com/Mirantis/kubeadm-dind-cluster

Installation instructions for kubectl: https://kubernetes.io/docs/tasks/tools/install-kubectl/
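Instead of rerunning `kubectl get nodes` by hand until every node shows `Ready`, a small polling loop can do the waiting. This is a sketch: `count_not_ready` and `wait_for_nodes`, the default attempt count, and the delay are hypothetical choices, and it assumes kubectl reaches the cluster through the `dind` context the script created.

```bash
#!/bin/sh
# Hypothetical helpers: wait until every node in the dind cluster is Ready.
# Assumes kubectl can reach the cluster through the "dind" context.

# Read `kubectl get nodes --no-headers` output on stdin and print how many
# nodes have a STATUS column (field 2) other than exactly "Ready".
# A cordoned node ("Ready,SchedulingDisabled") is counted as not Ready here.
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Poll until all nodes are Ready.
# $1: maximum attempts (default 30), $2: seconds between attempts (default 5).
wait_for_nodes() {
  local attempts delay i out
  attempts="${1:-30}"
  delay="${2:-5}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    out="$(kubectl --context dind get nodes --no-headers 2>/dev/null)"
    if [ -n "$out" ] && [ "$(printf '%s\n' "$out" | count_not_ready)" -eq 0 ]; then
      echo "all nodes Ready"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "timed out waiting for nodes" >&2
  return 1
}
```

Running `wait_for_nodes` right after `./dind-cluster-v1.8.sh up` returns once the master and both worker nodes report `Ready`, or fails after about two and a half minutes with the defaults.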

Recommended Posts

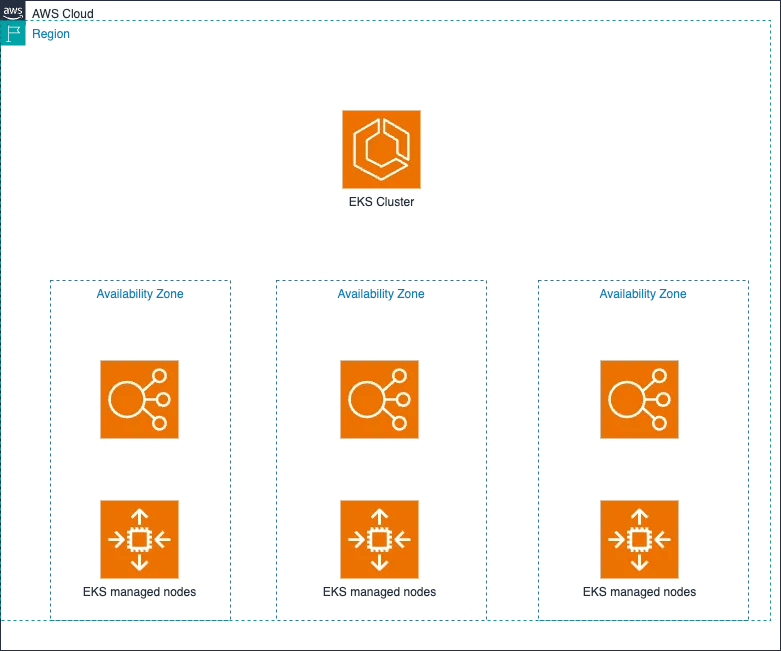

In this article, we’ll explore how to leverage Terraform to streamline the process of creating an AWS EKS cluster and integrating it with an Application Load Balancer (ALB), ensuring a scalable and resilient infrastructure for cloud native applications.

Publication Date

3/31/2024

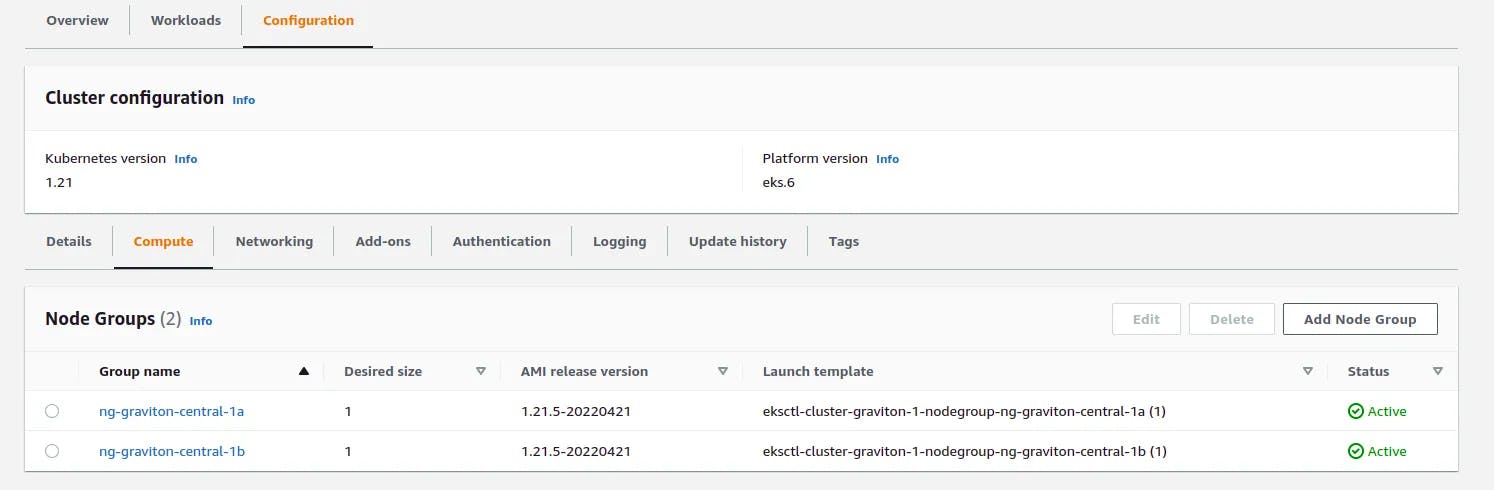

AWS introduced AWS Graviton series of processors supported EKS since 2019 and this gives a good opportunity to reduce cost and gain better performance.

Publication Date

4/29/2022